Key Takeaways

- AI has been advancing at a rapid pace, but it could be beginning to slow.

- The current AI scaling law is reportedly showing diminishing returns.

- A new AI scaling law may be emerging, one that gives LLMs more time to think.

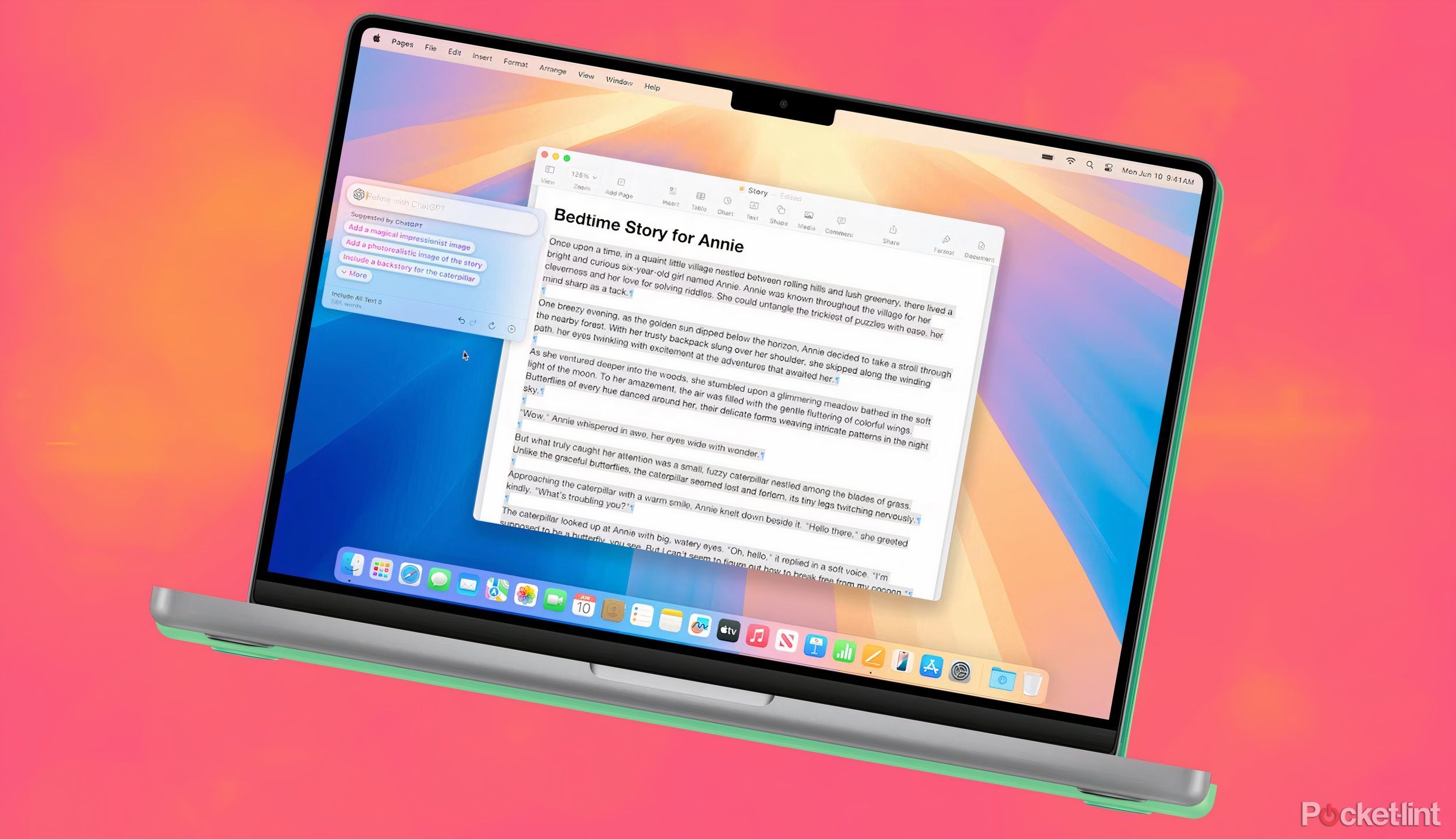

Advancements in AI technology have been moving at what feels like light speed since the AI boom kicked off a few years ago. But it now seems that the rapid progress in AI technology and large language models (LLMs) could be beginning to slow.

Recent media reports suggest that AI companies are improving their models and capabilities more slowly than they have in the past. Why? It has to do with the approach that AI companies like OpenAI have used to advance their LLMs since the AI race began, and that approach is now showing diminishing returns. It all comes down to AI scaling laws.

What are AI scaling laws?

AI companies rely on scale to build and improve LLMs

AI scaling laws are not something you'll find in your high school law textbook. They aren't laws of nature, physics or government. AI scaling laws are the methods and observations AI labs use to increase the size and capabilities of their LLMs: the models get bigger and better as they get more computing power and more data to train on.

But now it seems AI companies are finding out that you can't just give LLMs more computing power and data and expect them to turn into a super-intelligent AI god. A recent Bloomberg report says OpenAI, Google and Microsoft are struggling to improve their LLMs as quickly as they once did.

A possible solution to this problem was hinted at during Microsoft's recent Ignite 2024 event, when Microsoft CEO Satya Nadella said "we are seeing the emergence of a new scaling law."

What could this new AI scaling law be? "Test-time compute" is one possibility (via TechCrunch). The idea is to give LLMs more computing power after a prompt has been entered, rather than concentrating it all in the pre-training phase. In other words, it gives AI time to think. "We thus see a new form of computational scaling appear. Not just more training data and larger models but more time spent 'thinking' about answers," AI researcher Yoshua Bengio said in a Financial Times op-ed.

AI advancement may be running out of gas at the moment, but if this new scaling law suggests anything, it's that another AI boom could be around the corner. Whatever happens, tech companies around the world will surely keep investing in AI and pushing the boundaries of what it can do.
